It sounds like an obvious statement, but many managers looking to rationalize performance and capacity management activities to cut costs seem to miss the point. The reasoning goes: a real-time monitor already collects performance data, so why not use it for everything relating to performance and capacity? I think there are some good reasons why not, and I would be keen to hear of any others from you.

SNMP data capture tends to treat everything as a managed object, so a CPU sits at the same level as a network switch, which sits at the same level as a JVM, and so on. While this can be a useful model for real-time support, it doesn't provide the organized view of the data (for example, by service, application or workload) that capacity reporting requires.

Limited “user” data also means that no meaningful workload components can be derived when building a capacity planning model. SNMP collection tells you ‘what’ happened, but not ‘who’ did it! It doesn’t help capacity analysis to see unexplained peaks of usage with no comprehensive user data to indicate who was responsible for them. When you poll via SNMP, you get data only for the processes running at that moment. However, because commands and processes terminate rapidly in any busy environment, many will start and finish between polls, so the capture ratio (the proportion of measured resource usage you can attribute to specific processes) will be poor: you simply will not see which processes caused those peaks.
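To make the capture-ratio point concrete, here is a small Python sketch of snapshot polling. It is a toy model built on assumptions of my own, not how any particular product works: polls happen at a fixed interval, each process burns CPU at a constant rate, and a poll that finds a process reads its cumulative CPU counter (in the way the HOST-RESOURCES-MIB `hrSWRunPerfCPU` object reports CPU consumed so far). Under that model, CPU used after the last poll that saw a process, or by a process that never overlaps a poll at all, cannot be attributed to anyone. The names `Process`, `last_poll_seen` and `capture_ratio` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Process:
    start: float     # wall-clock start time in seconds (assumed >= 0)
    duration: float  # how long the process runs, in seconds
    cpu_rate: float  # CPU-seconds consumed per wall-clock second (assumed constant)


def last_poll_seen(p: Process, interval: float) -> Optional[float]:
    """Return the time of the last poll that found the process running, or None.

    Polls are assumed to happen at t = 0, interval, 2*interval, ...
    A poll at time t sees the process if start <= t < start + duration.
    """
    end = p.start + p.duration
    first_poll = math.ceil(p.start / interval) * interval
    if first_poll >= end:
        return None  # the process started and exited between two polls
    # Largest poll time strictly before the process ends.
    return (math.ceil(end / interval) - 1) * interval


def capture_ratio(processes: List[Process], interval: float) -> float:
    """Share of total CPU that snapshot polling can attribute to named processes.

    A process seen at least once is credited with the CPU it used up to the
    last poll that saw it; anything consumed after that poll is lost.
    """
    total = sum(p.cpu_rate * p.duration for p in processes)
    attributed = 0.0
    for p in processes:
        last = last_poll_seen(p, interval)
        if last is not None:
            attributed += p.cpu_rate * (last - p.start)
    return attributed / total if total else 0.0


if __name__ == "__main__":
    # One long-running daemon plus a burst of short-lived commands (made-up numbers).
    daemon = [Process(start=0, duration=600, cpu_rate=0.2)]
    commands = [Process(start=1 + 3 * k, duration=2, cpu_rate=0.9) for k in range(150)]
    for interval in (60, 300):
        ratio = capture_ratio(daemon + commands, interval)
        print(f"poll every {interval}s: capture ratio {ratio:.2f}")
```

Lengthening the polling interval only makes this worse, and shortening it trades attribution for the extra polling overhead discussed below; a collector running on the device itself can record every process as it ends instead.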

SNMP data sources are typically configured by a support group outside the capacity team and left running on default settings. As a result, the capacity planner has to compromise on the depth of data provided. All too often such sparse data makes the job next to impossible, even when working purely at the “system level” (i.e. with no user, process or command data). By contrast, the data collectors within full capacity management products such as Metron’s Athene software provide a rich source of data that has been custom-built to meet the needs of the capacity analyst.

Any request to change the data provided via SNMP will usually have to go through external change control. The request may be declined; at the very least it will mean delay. With a dedicated capacity solution such as Athene, reconfiguring data collection does not require external change control.

With SNMP capture, each polling request adds only a small number of bytes of network traffic, but constant polling across many devices can add up to a significant amount of overhead in large environments. Each SNMP request also consumes resources (CPU, for example) on the system where the data is captured. While some overhead is unavoidable for any tool, capturing data continuously via SNMP is more likely to cause unwanted resource usage. That is the price of not having data capture software installed on the device that has been engineered specifically for minimal overhead.
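The cost per request is small, but it multiplies by devices, requests and polling cycles. The back-of-the-envelope Python sketch below shows how. The function name and every input are hypothetical, and the 200-byte figure is an illustrative assumption rather than a measured SNMP packet size; it also counts only network bytes, not the CPU spent answering requests on the polled devices.

```python
def daily_polling_bytes(devices: int, requests_per_poll: int,
                        bytes_per_exchange: int, interval_s: int) -> int:
    """Rough daily network traffic generated by SNMP polling.

    bytes_per_exchange is an assumed average size of one request plus its
    response; real sizes vary with SNMP version, credentials and how many
    variables each request carries.
    """
    polls_per_day = 86_400 // interval_s  # whole polling cycles in 24 hours
    return devices * requests_per_poll * bytes_per_exchange * polls_per_day


if __name__ == "__main__":
    # Hypothetical estate: 1,000 devices, 20 requests each per cycle,
    # ~200 bytes per request/response pair, polled every minute.
    total = daily_polling_bytes(1_000, 20, 200, 60)
    print(f"{total / 1e9:.2f} GB per day")  # prints "5.76 GB per day"
```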

I think of ‘full’ capacity management as encompassing the following:

· analysis and root cause identification of problems

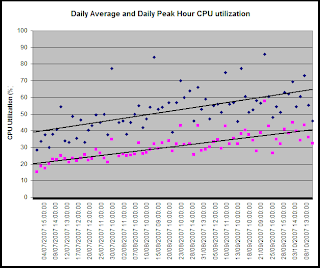

· regular (e.g. daily, weekly, monthly) reporting of performance and capacity issues

· prediction of likely performance problems as workloads grow or change

· prediction of likely capacity requirements as the business changes

If you have any thoughts about other reasons why SNMP capture is insufficient to help the capacity analyst achieve these tasks, please comment.

Andrew Smith

Chief Sales & Marketing Officer