Hyper-V dynamic memory in action

During the tests I made some interesting observations about dynamic memory that I would like to share with you.

High Pressure

I took this screenshot while the tests were running. During the test a warning status was raised, indicating that memory demand of 1346 MB exceeded the assigned memory of 1077 MB, and at this point the memory balancer kicked in. This was a point in time where pressure would have shown as over 100 on our chart.
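
To make the "over 100" figure concrete, here is a minimal sketch that treats pressure as memory demand expressed as a percentage of assigned memory. That definition is an assumption made for illustration, and the function name `memory_pressure` is hypothetical rather than any Hyper-V API; the two input values are the ones reported in the warning above.

```python
def memory_pressure(demand_mb: float, assigned_mb: float) -> float:
    """Return memory demand as a percentage of assigned memory.

    Assumption: pressure = 100 * demand / assigned. This is an
    illustrative definition, not a call into Hyper-V itself.
    """
    if assigned_mb <= 0:
        raise ValueError("assigned_mb must be positive")
    return 100.0 * demand_mb / assigned_mb


# Values taken from the high-pressure warning described above.
pressure = memory_pressure(demand_mb=1346, assigned_mb=1077)
print(f"pressure: {pressure:.0f}")  # prints "pressure: 125"
```

Under this assumption, any value above 100 means the guest wants more memory than it currently has, which matches the point where the balancer stepped in.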

Low Pressure

From this screenshot you can see that assigned memory is set at 2352 MB but demand is well below that. In this case the balancer will take memory away because it is not required.

Random I/O on Dynamic Disks

The comparison below confirms the problem I encountered with random I/O on dynamic disks: the fixed disk performed much better.

Conclusions, Caveats, and Final Thoughts

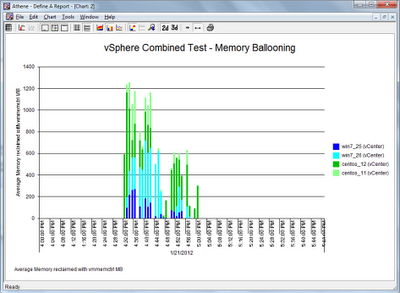

Overall, the combined results for vSphere and Hyper-V were surprisingly close.

Individual tests produced some interesting findings:

· Windows CPU performance on Hyper-V was significantly slower

· Two vCPUs running a single process had little negative impact

· Random I/O on a Hyper-V dynamic disk had terrible performance

· Hyper-V dynamic memory worked great with no performance penalty; from a management perspective it is a really good feature

Caveats

· Workloads were very general and dependent on the Perl implementation

· Many more variables could be taken into account

· Results will differ on other hardware

That wraps up my vSphere vs Hyper-V showdown series. Remember that you should run benchmarks in your own environment to help you make the best-informed decisions.

Dale Feiste

Consultant